The nova-docker project described here is unmaintained and has been retired. Please see the documentation on the Zun service for an alternative solution.

- 1 Overview

- 2 Configure an existing OpenStack installation to enable Docker

- 3 Configure DevStack to use Nova-Docker

This is a quick post to explain that, by default, Docker does not need hardware virtualization (VT-x). Is Docker virtualization? In the sense that it allows you to run multiple independent environments on the same physical host, yes.

Overview


The Docker driver is a hypervisor driver for OpenStack Nova Compute. It was introduced with the Havana release, but lives out-of-tree for Icehouse and Juno. Being out-of-tree has allowed the driver to reach maturity and feature parity faster than would have been possible had it remained in-tree. It is expected the driver will return to mainline Nova in the Kilo release.

Docker is an open-source engine which automates the deployment of applications as highly portable, self-sufficient containers which are independent of hardware, language, framework, packaging system and hosting provider.

Docker provides management of Linux containers with a high-level API, providing a lightweight solution that runs processes in isolation. It provides a way to automate software deployment in a secure and repeatable environment. A Docker container includes a software component along with all of its dependencies: binaries, libraries, configuration files, scripts, virtualenvs, jars, gems, tarballs, etc. Docker can be run on any x64 Linux kernel supporting cgroups and aufs.

Docker is a way of managing multiple containers on a single machine. However, using it behind Nova makes it much more powerful, since it is then possible to manage several hosts, each of which in turn manages hundreds of containers. The current Docker project aims for full OpenStack compatibility.

Containers don't aim to be a replacement for VMs; they are complementary, in the sense that each is better suited to specific use cases.

What unique advantages does Docker bring over other container technologies?

Docker builds high-level features on top of container and filesystem technologies, and these features are not generic enough to be managed by libvirt:

- Process-level API: Docker can collect the standard outputs and inputs of the process running in each container for logging or direct interaction, it allows blocking on a container until it exits, setting its environment, and other process-oriented primitives which don’t fit well in libvirt’s abstraction.

- Advanced change control at the filesystem level: Every change made on the filesystem is managed through a set of layers which can be snapshotted, rolled back, diffed, etc.

- Image portability: The state of any Docker container can optionally be committed as an image and shared through a central image registry. Docker images are designed to be portable across infrastructures, so they are a great building block for hybrid cloud scenarios.

- Build facility: Docker can automate the assembly of a container from an application’s source code. This gives developers an easy way to deploy payloads to an OpenStack cluster as part of their development workflow.
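The layered change control described above can be illustrated with a toy model. This is a hypothetical in-memory sketch, not Docker's actual storage driver: the `LayeredFS` class, its method names and the `WHITEOUT` marker are illustrative assumptions. Reads search the writable top layer first and then the read-only layers from newest to oldest; writes only ever touch the top layer; and deletions hide a lower file instead of erasing it. That is what makes snapshots, rollbacks and diffs cheap.

```python
from collections import ChainMap

# Hypothetical marker for "deleted in an upper layer" (a so-called whiteout).
WHITEOUT = object()


class LayeredFS:
    """Toy model of layered copy-on-write storage (not Docker's real driver)."""

    def __init__(self, base: dict):
        # Committed, read-only layers, oldest first; each maps path -> contents.
        self.layers = [dict(base)]
        # The single writable layer sitting on top of the stack.
        self.top = {}

    def _view(self) -> ChainMap:
        # ChainMap searches its maps left to right, so the newest layer wins.
        return ChainMap(self.top, *reversed(self.layers))

    def read(self, path: str) -> str:
        value = self._view().get(path)
        if value is None or value is WHITEOUT:
            raise FileNotFoundError(path)
        return value

    def write(self, path: str, data: str) -> None:
        # Lower layers are never modified; the change lands in the top layer.
        self.top[path] = data

    def delete(self, path: str) -> None:
        # Hide, rather than erase, any copy that lives in a lower layer.
        self.top[path] = WHITEOUT

    def diff(self) -> dict:
        # Only the delta made since the last commit.
        return dict(self.top)

    def commit(self) -> None:
        # Freeze the writable layer as a new read-only layer (a snapshot).
        self.layers.append(self.top)
        self.top = {}

    def rollback(self) -> None:
        # Discard all uncommitted changes.
        self.top = {}

    def listdir(self) -> list:
        view = self._view()
        return sorted(p for p in view if view[p] is not WHITEOUT)
```

Committing only appends the small top layer, so the base layer is stored once and shared no matter how many snapshots are taken on top of it.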

How does the Nova hypervisor work under the hood?

The Nova driver embeds a tiny HTTP client which talks to the Docker REST API through a Unix socket. It uses the HTTP API to control containers and fetch information about them.
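To illustrate the mechanism, here is a minimal sketch of an HTTP client speaking to a Unix socket, using only Python's standard library. It is not the nova-docker client: `UnixHTTPConnection` and `docker_get` are hypothetical names, and the default socket path `/var/run/docker.sock` and the `/containers/json` endpoint are assumptions based on a stock Docker Engine install.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 10.0):
        # The host name is only used for the Host: header.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        # Replace the TCP connect with an AF_UNIX connect; the rest of
        # http.client (request framing, response parsing) is reused as-is.
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """GET a path from the Docker API over its Unix socket and decode the JSON."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"GET {path} -> HTTP {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On a host where the calling user can open the socket (for example, a member of the docker group), `docker_get("/containers/json")` would return the running containers as decoded JSON. Overriding only `connect()` keeps the standard request and response handling while swapping the transport.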

The driver will fetch images from the OpenStack Image Service (Glance) and load them into the Docker filesystem. Images may be placed in Glance by exporting them from Docker using the 'docker save' command.

Older versions of this driver required running a private docker-registry, which would proxy to Glance. This is no longer required.

Configure an existing OpenStack installation to enable Docker

Installing Docker for OpenStack

The first requirement is to install Docker on your compute hosts.

In order for Nova to communicate with Docker over its local socket, add nova to the docker group and restart the compute service to pick up the change:

You will also need to install the driver:

You should then install the required modules

You may optionally choose to create operating-system packages for this, or use another appropriate installation method for your deployment.

Nova configuration

Nova needs to be configured to use the Docker virt driver.

Edit the configuration file /etc/nova/nova.conf according to the following options:

Create the directory /etc/nova/rootwrap.d, if it does not already exist, and inside that directory create a file 'docker.filters' with the following content:

Glance configuration

Glance needs to be configured to support the 'docker' container format. Keep the default formats in the list so as not to break an existing Glance installation.

Using Nova-Docker

Once you have configured Nova to use the Docker driver, the flow is the same as for any other driver.

Only images with a 'docker' container format will be bootable. Such an image is basically a tarball of the container filesystem.

It's recommended to add new images to Glance by using Docker. For instance, here is how you can fetch images from the public registry and push them back to Glance in order to boot a Nova instance with it:

Then, pull the image and push it to Glance:

NOTE: The name you provide to glance must match the name by which the image is known to docker.

You can then boot instances from the nova CLI:

Once the instance is booted:

You can also see the corresponding container on docker:

The command used here is the one configured in the image. Each container image can have a command configured for the run, and the driver does not usually override it. Imagine booting an apache2 instance: it will start the Apache process if the image has been properly authored via a Dockerfile.

Configure DevStack to use Nova-Docker

Using the Docker hypervisor via DevStack replaces all manual configuration needed above.

Note: the steps below use localadmin as the admin user; adjust to suit your configuration.

Install the latest Docker release

Ubuntu:

Fedora:

Prepare Nova-Docker

Set up Devstack

Clone devstack (it is recommended to use the same releases of devstack and nova-docker, e.g., stable/kilo, master, etc.)

Before running DevStack's stack.sh script, configure the following options in the local.conf or localrc file:

Configure Nova to use the nova-docker driver. (Note: Neutron is the default as of Kilo.)

Start Devstack

./stack.sh

Testing Nova-Docker

Copy the filters

Start a Container

Assign it a floating IP and connect to it

Configure DevStack to use Nova-Docker (alternate post-stack method)

Using the Docker hypervisor via DevStack replaces all manual configuration needed above.

Install Docker, then install DevStack and run stack.sh.

Once stack.sh completes, run unstack.sh from the devstack directory.

Install nova-docker:

Prepare DevStack:

Run stack.sh from devstack directory:

It may be necessary to install a Docker filter as well:

Resources

- Lars Kellogg-Stedman; Installing Nova-Docker with Devstack (blog post)

Community

We have a Nova subteam, and the involvement of various contributors can be verified via GitHub's contributors page.

The Docker team is also involved with the more generic and highly-overlapping efforts of the Nova Containers Sub-team.

We are available on IRC on Freenode in #nova-docker. The containers team may be found in #openstack-containers.

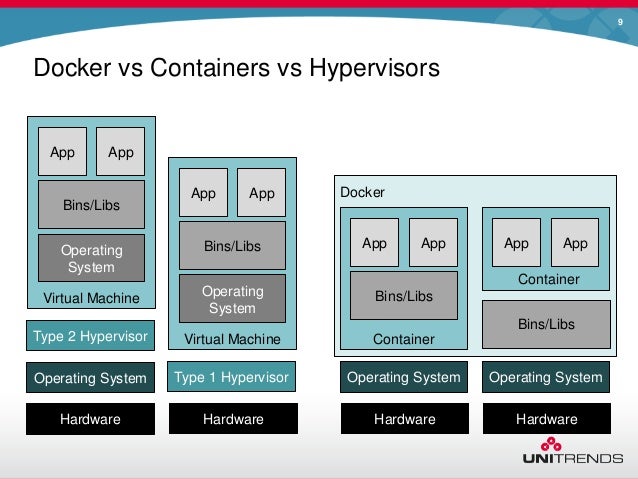

Containers virtualize at the operating system level; hypervisors virtualize at the hardware level.

Hypervisors abstract the operating system from the hardware; containers abstract the application from the operating system.

Hypervisors consume storage space for each instance. Containers use a single storage space plus smaller deltas for each layer, and are thus much more efficient.

Containers can boot and be application-ready in less than 500ms, which creates new design opportunities for rapid scaling. Hypervisor guests boot as fast as their OS allows, typically in about 20 seconds, depending on storage speed.

Containers have built-in and high value APIs for cloud orchestration. Hypervisors have lower quality APIs that have limited cloud orchestration value.

There are many Linux container technologies but they all operate using the same principles of isolating an application space within an operating system.

LXC is a userspace interface for the Linux kernel containment features.

Through a powerful API and simple tools, it lets Linux users easily create and manage system or application containers.

Docker now uses its own container library, libcontainer, or other container implementations such as Google's LMCTFY (GitHub: google/lmctfy).

Docker is a software toolchain for managing LXC containers. This seems conceptually similar to the way vSphere vCenter manages large numbers of ESXi hypervisor instances. In operation, however, it is very different and much more powerful.

Docker is an open platform for developers and sysadmins to build, ship, and run distributed applications. Consisting of Docker Engine, a portable, lightweight runtime and packaging tool, and Docker Hub, a cloud service for sharing applications and automating workflows, Docker enables apps to be quickly assembled from components and eliminates the friction between development, QA, and production environments. As a result, IT can ship faster and run the same app, unchanged, on laptops, data center VMs, and any cloud. – What Is Docker? An open platform for distributed apps

Processes executing in a Docker container are isolated from processes running on the host OS or in other Docker containers. Nevertheless, all processes are executing in the same kernel. Docker leverages LXC to provide separate namespaces for containers, a technology that has been present in Linux kernels for 5+ years and considered fairly mature. It also uses Control Groups, which have been in the Linux kernel even longer, to implement resource auditing and limiting. – Docker: Lightweight Linux Containers for Consistent Development and Deployment | Linux Journal

However, as you spend more time with containers, you come to understand the subtle but important differences. Docker does a nice job of harnessing the benefits of containerization for a focused purpose, namely the lightweight packaging and deployment of applications. – Docker: Lightweight Linux Containers for Consistent Development and Deployment | Linux Journal

- Docker containers have an API that allows external administration of the containers. This is the core value proposition of Docker.

- Containers have less overhead than VMs (both KVM & ESX), and applications generally run faster in them than inside a hypervisor.

- Most Linux applications can run inside a Docker container.

Clustering and Multiples

- Containers promote the idea of spreading applications across multiple containers.

- I think this is partly because containers tend to be resource-constrained by definition, but also because deploying containers is simple.

- For example, it makes more sense to deploy HAProxy as a load balancer in one container, then multiple Tomcat / Node instances for the application in others. Spreading load across many small instances is well suited to cloud architectures, where the peak performance of a single container can be constrained by overloading/oversubscription in the cloud provider.

- Docker and its sister products don’t have any integration with legacy networking services. The use of traditional load balancers and proxies doesn’t make any sense in this system.

- Framework for container virtualization.

- Containers are Linux instances that hold applications.

- Docker is molded on the concept of shipping containers to present a standardised way of presenting

- Containers are a long-established practice, e.g. Solaris Zones and IBM LPARs, and many more.

- But these products were relatively hard to use.

- Docker adds a wrapper around containers that makes them easy to consume. Toolchain for self-service.

- Developers care about apps; operations cares about containers/hypervisors/bare metal.

- Open source means no licensing costs.

Business Process

- Developers can build an app in a Docker container, then ship the container to a continuous integration server (à la Jenkins).

- Developers can package the application together with the operating system.

- Docker provides strong APIs that allow programmatic control. The API is core to what Docker does.

- It is probably not optimal to use Puppet or Chef to build a Docker image.

- Some people use Ansible to automate Docker. Ansible has some popularity with networking for its support for device specific features.

Workflow

- There are thousands of images that are pre-packaged by Docker.

- Most images are based on Ubuntu.

- Docker orchestration platforms are important for more sophisticated uses like resilience, fault tolerance, scaling etc through intelligent container placement in the infrastructure. (Approx. similar to cloud orchestration for hypervisor placement in OpenStack).

- Orchestration systems like Decking, New Relic’s Centurion and Google’s Kubernetes all aim to help with the deployment and life cycle management of containers.

- There are many more.

- Docker base images will have ‘layers’ of application added to the base image. The Union File System means that only the delta of each layer is added to the image. This dramatically reduces the space consumed by the operating system: it's the same in every VM, so why keep duplicating it in each VM (and then have the storage array deduplicate it)?

- Docker has its own storage system for the delta image. Currently this is the Union File System, though other options exist, and the choice has been the subject of some strong debate. The root file system has everything needed to mount the Docker image.

- Every time you make a change to the Docker image, a new layer is created and marked read-only. This delta from the underlying Docker image reduces storage consumption considerably.

- there are many layers possible for each delta from the underlying Docker image.

- Docker images are built from a base image, then

- Docker can run baremetal or inside a hypervisor.

- LXC uses a Linux feature, “control groups”, which has the desirable side effect of providing deep insight into container resource consumption.

Linux Containers rely on control groups which not only track groups of processes, but also expose a lot of metrics about CPU, memory, and block I/O usage. We will see how to access those metrics, and how to obtain network usage metrics as well. This is relevant for “pure” LXC containers, as well as for Docker containers. – Gathering LXC and Docker containers metrics | Docker Blog
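As a small illustration of reading those cgroup metrics, the sketch below parses cgroup files with plain file I/O. It assumes the kernel's "flat keyed" format (one `<key> <value>` pair per line, as in `memory.stat` or the cgroup v2 `cpu.stat`) and the standard usage files `memory.current` (cgroup v2) and `memory.usage_in_bytes` (cgroup v1). Where a given container's cgroup directory lives depends on the cgroup version and on the cgroup driver Docker uses, so any path you pass in is an assumption about your host; the function names are hypothetical.

```python
from pathlib import Path


def read_flat_keyed(path) -> dict:
    """Parse a cgroup "flat keyed" file: one "<key> <integer>" pair per line."""
    stats = {}
    for line in Path(path).read_text().splitlines():
        parts = line.split()
        if len(parts) != 2:
            continue  # ignore blank or differently formatted lines
        key, value = parts
        try:
            stats[key] = int(value)
        except ValueError:
            continue  # ignore non-integer values such as "max"
    return stats


def memory_usage_bytes(cgroup_dir) -> int:
    """Current memory usage of a cgroup, trying the v2 file name, then v1."""
    directory = Path(cgroup_dir)
    for name in ("memory.current", "memory.usage_in_bytes"):
        candidate = directory / name
        if candidate.exists():
            return int(candidate.read_text().strip())
    raise FileNotFoundError(f"no memory usage file found in {directory}")
```

For example, given a container's cgroup directory, `memory_usage_bytes(cg)` returns its current memory use, and `read_flat_keyed(f"{cg}/memory.stat")` gives the per-category breakdown as a dict of integers.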

- Many websites talk about CoreOS and Docker together without highlighting the differences. Confusing.

- CoreOS is a fork of Chrome OS, by the means of using its software development kit (SDK) freely available through Chromium OS as a base while adding new functionality and customizing it to support hardware used in servers – CoreOS – Wikipedia, the free encyclopedia

CoreOS provides no package manager, requiring all applications to run inside their containers, using Docker and its underlying Linux Containers (LXC) operating system–level virtualization technology for running multiple isolated Linux systems (containers) on a single control host (CoreOS instance). That way, resource partitioning is performed through multiple isolated userspace instances, instead of using a hypervisor and providing full-fledged virtual machines. This approach relies on the Linux kernel’s cgroups functionality, which provides namespace isolation and abilities to limit, account and isolate resource usage (CPU, memory, disk I/O, etc.) of process groups. CoreOS – Wikipedia, the free encyclopedia

- CoreOS is a lightweight operating system designed to offer the minimum viable functionality as an operating system.

- I understand that this forms a strong basis for deployment as the OS inside the Docker container.

- At the same time, it is suitable for hosting Docker containers. CoreOS is ‘under’ Docker and ‘inside’ it too. That might be my confusion.

- CoreOS seems to be a Linux distribution that has software packages for service discovery and configuration sharing that assist with large numbers of deployments. Useful for clustered applications.

- Fleet is an orchestration tool for CoreOS and is separate from Docker, which has its own orchestration tools.

- Docker has wide ranging support from established vendors. To some onlookers this seems to be fashionable but there

- VMware has made strong defensive moves to embrace Docker

- Docker is well suited to PaaS, where applications are developed and deployed directly from the development platform into containers. Many PaaS platforms offer CI/CD systems where deployment means not only ‘live’ but also test, UAT, etc.

- Many existing hosting companies can easily offer Docker hosting since the requirements are a modern Linux kernel and few dependencies. I think that Docker hosting isn’t much use without orchestration tools and the user would have to provide them.

- Big names, including Google, have flocked around Docker.

- Developers who are looking to adopt DevOps seem to have strong attraction to the product.

- Impact to operations is high with SysAdmins moving to Docker management and more valuable upstream functions integrated with the developers. That is, if PaaS systems are not used.

Docker Orchestration

There are a number of Orchestration toolchains for Docker.

Getting Started with Docker Orchestration using Fig | Docker – Fig was a tool developed to enhance Docker deployment by an online Docker hosting company (cloud) and was recently acquired by Docker itself.

Cloud Foundry – PivotalCF can use Docker to deploy PaaS-developed applications through service broker – Docker Service Broker for Cloud Foundry | Pivotal P.O.V.

Found this video useful. The main session is about 35 minutes (and then questions which you could easily skip).